About 4 years ago I moved to London and began working at a small startup called Goodlord. We had about 14 engineers when I joined. One of the main reasons for joining the team was that in addition to being incredibly talented, they also had a JVM language on the backend. At the time I had never heard of their backend language but I felt the need to gain exposure to the JVM and its ecosystem in order to become a better engineer and as a foray into backend engineering.

The language they were using was Scala, a fairly flexible language at the crossroads between Java and Haskell that supports both Object Oriented Programming and Functional Programming.

Learning Scala

My main goal at the company was to shift from frontend development to backend development, and in order to do this I was going to learn Scala and show the team how great I would be at it. Although I didn’t know it at the time, one of the criticisms you often hear about Scala is that it is complex, hard to understand, and hard to learn.

Today, I understand why critics think this is the case, but I don’t entirely agree. Unlike languages such as Java, which has an ad hoc building block for your every need, Scala has only a few building blocks. The tools Scala provides are few, but they are very powerful and cleverly designed to fit well together. If it is any indication, the latest edition of the book Programming in Scala has 668 pages, while the latest edition of Java: The Complete Reference is 1248 pages long.

Scala is considered a powerful language. This means that it gives the programmer more control than other languages do, and it allows programmers to express a lot with only a little. An example of a powerful feature is the type level programming that Scala allows at the compiler level. It lets programmers encode properties of their program in types, so that the compiler can check that those properties hold.
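As a small illustration of that idea, here is a minimal sketch using phantom types. The `Door`, `Open`, and `Closed` names are invented for this example; the point is only that an invalid sequence of calls is rejected at compile time rather than at runtime.

```scala
// Hypothetical example: the door's state lives only in the type parameter.
sealed trait DoorState
sealed trait Open extends DoorState
sealed trait Closed extends DoorState

// `S` is a phantom type: it never appears in a field, only in signatures.
final case class Door[S <: DoorState](name: String)

object Door {
  def closed(name: String): Door[Closed] = Door[Closed](name)

  // Only a closed door can be opened, and only an open door can be closed.
  def open(door: Door[Closed]): Door[Open] = Door[Open](door.name)
  def close(door: Door[Open]): Door[Closed] = Door[Closed](door.name)
}

object PhantomDemo {
  def main(args: Array[String]): Unit = {
    val front = Door.closed("front")
    val opened = Door.open(front)
    val shut = Door.close(opened)
    println(shut.name)
    // Door.open(opened) // would not compile: a Door[Closed] is required
  }
}
```

This is a very modest use of the type system, but the same principle scales up to much richer guarantees.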

I think that learning difficulties related to Scala aren’t necessarily to do with the language itself, but rather the new concepts and use cases the language is useful for. Working on an enterprise Scala program with no prior Functional Programming knowledge is akin to working on an enterprise Java program with no prior Object Oriented Programming knowledge. You would fail to recognise that the code you are seeing contains implementations of formalized and easily identifiable design patterns.

Scala is elegant and stands on its own. But without a doubt, a major benefit programmers get from learning it is the plethora of Functional Programming concepts they pick up along the way.

While Scala newcomers are often advised to begin with Functional Programming in Scala (a.k.a. “the red book”), I don’t think this is a great place to start. The red book is a masterpiece, but it is a difficult and academic read. I would instead recommend the less rigorous but still excellent Functional Programming Simplified, then the series of articles The Neophyte’s Guide to Scala, and finally Scala With Cats.

Practicing Scala

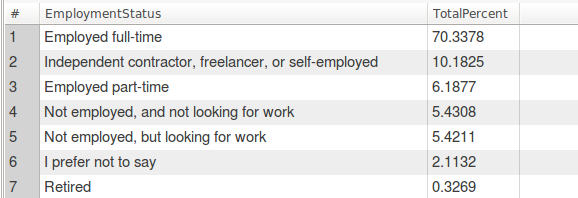

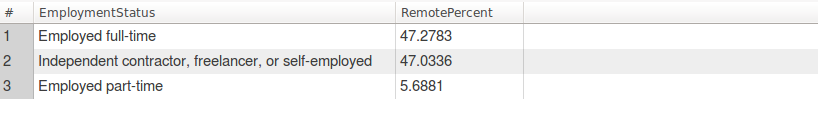

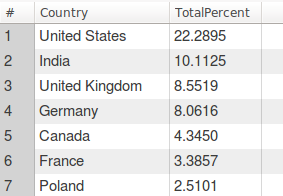

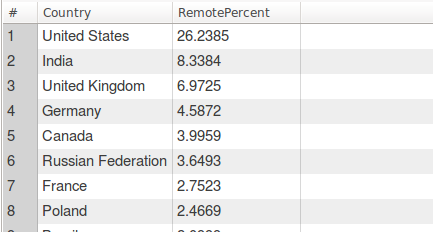

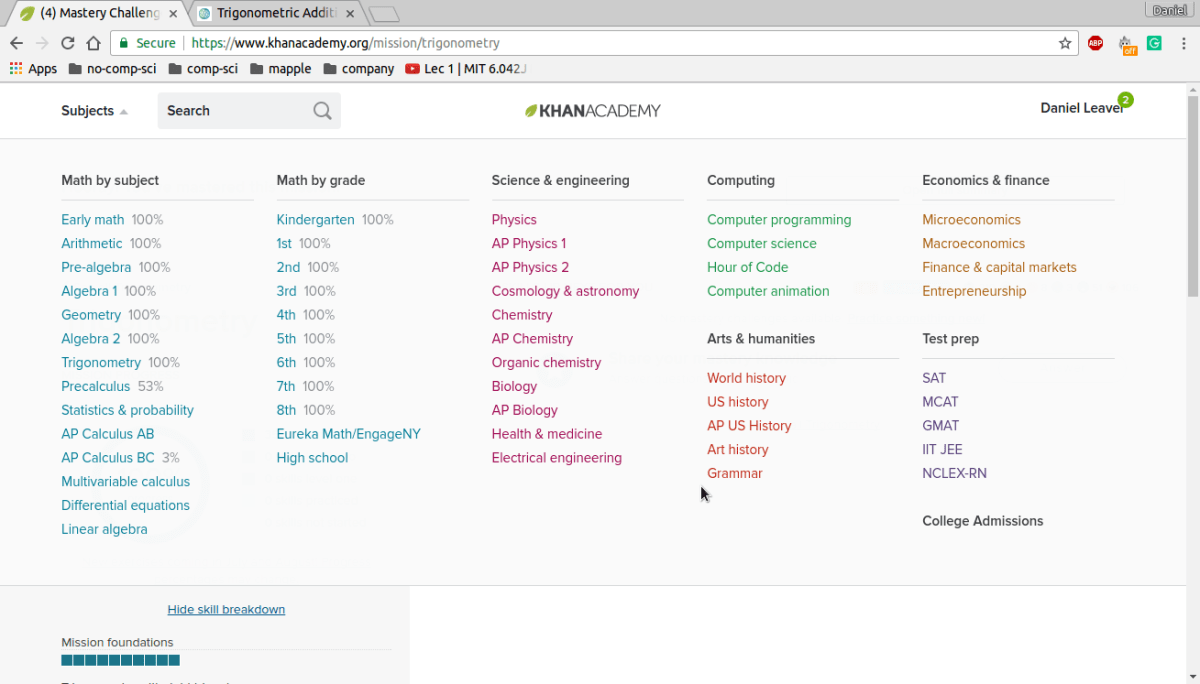

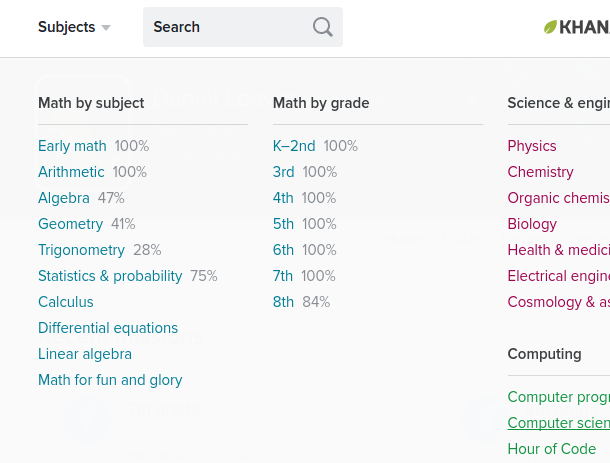

Scala represents a very small slice of the industry. In the Stack Overflow 2021 Developer Survey, about 3% of respondents reported using Scala, while JavaScript was the most widely used language at 65% and Java sat at 36%.

Scala in itself can be considered a niche. But even within the Scala community there are two broad use cases that rarely overlap. There are those using the language for running Spark jobs, and those using the language for backend microservices.

While I do not have industry experience with Spark, I have read Spark: The Definitive Guide. Spark is an Apache Software Foundation project that helps companies work with amounts of data too big to fit onto a single computer.

Spark is fairly similar to MapReduce, except that it is considered an improvement on the latter because it is significantly faster. It achieves the speed gains over its competitor by mostly working in memory and saving to disk only when its job’s outputs need to be redistributed among the workers, while MapReduce will save each step of its calculations to disk.
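To make the shape of such a job concrete, here is a word count written with plain Scala collections rather than with Spark itself. It only mimics the map, shuffle, and reduce stages on a single machine, and `wordCount` and the sample lines are invented for the sketch. Every intermediate result stays in memory, which is roughly the property Spark tries to preserve across a cluster.

```scala
object WordCountSketch {
  // Plain Scala collections, not the Spark API: every stage stays in memory.
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.toLowerCase.split("\\s+")) // "map" stage: one record per word
      .filter(_.nonEmpty)
      .groupBy(identity)                    // "shuffle" stage: gather equal keys
      .map { case (word, occurrences) => word -> occurrences.size } // "reduce" stage

  def main(args: Array[String]): Unit = {
    val lines = Seq("spark keeps data in memory", "MapReduce writes data to disk")
    println(wordCount(lines)("data")) // prints 2
  }
}
```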

Spark’s backend is written in Scala. Today it provides clients in many different languages, but originally it offered only a few, Scala among them. The JVM client is still the fastest and most powerful one to date, as it allows access to lower level constructs than the other clients do.

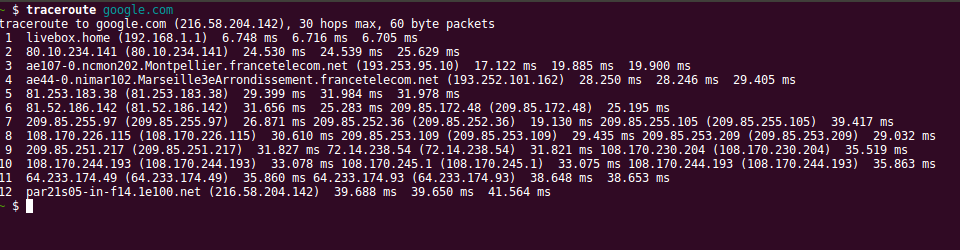

The other niche Scala has found is backend development. Teams like the ones I worked in at Goodlord and Babylon sometimes opt for the language for writing their microservices. By virtue of running on the JVM, the language has a fantastic runtime with very good tooling, and Scala programmers can also import Java libraries without difficulty.
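For instance, the following sketch calls the JDK’s own `java.time` classes directly from Scala, with no wrapper or binding layer. The `daysBetween` helper and the dates are made up for illustration.

```scala
import java.time.LocalDate
import java.time.temporal.ChronoUnit

object JavaInteropDemo {
  // Both the arguments and the calculation use Java's own java.time classes.
  def daysBetween(start: LocalDate, end: LocalDate): Long =
    ChronoUnit.DAYS.between(start, end)

  def main(args: Array[String]): Unit = {
    val joined = LocalDate.of(2021, 1, 1)
    val review = joined.plusMonths(3) // 2021-04-01
    println(daysBetween(joined, review)) // prints 90
  }
}
```

Third-party Java libraries are used the same way once they are on the classpath.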

The initial enthusiasm for using Scala on the backend had a lot to do with the Lightbend toolkit. Lightbend provides the Akka toolkit which equips programmers with powerful capabilities to create distributed applications. Akka is to Scala engineers vaguely what Spring Boot is to Java engineers. The most famous features it provides are the Actor System, Streams, and HTTP routing functionality. The Actor System is a particularly interesting way of doing concurrency where each actor has its own non-sharable mutable state, and where actors will communicate via messages and queues.
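To give a feel for the actor idea without pulling in Akka, here is a hand-rolled toy actor built on a JDK blocking queue. It is not Akka’s API, and `CounterActor`, `Add`, `Report`, and `Stop` are names invented for this sketch. What it shows is the core contract: the state is private to one thread, and the outside world can only send messages to a mailbox.

```scala
import java.util.concurrent.LinkedBlockingQueue

// Not Akka's API: a toy actor to illustrate a mailbox and private state.
sealed trait Message
final case class Add(amount: Int) extends Message
final case class Report(reply: Int => Unit) extends Message
case object Stop extends Message

final class CounterActor {
  private val mailbox = new LinkedBlockingQueue[Message]()
  private var total = 0 // mutable state, touched only by the actor's own thread

  private val worker = new Thread(new Runnable {
    def run(): Unit = {
      var running = true
      while (running) {
        // Messages are handled one at a time, in the order they arrived.
        mailbox.take() match {
          case Add(amount)   => total += amount
          case Report(reply) => reply(total)
          case Stop          => running = false
        }
      }
    }
  })
  worker.start()

  // The only way to interact with the actor is to enqueue a message.
  def send(message: Message): Unit = mailbox.put(message)

  // Wait for the actor to finish after it has received Stop.
  def join(): Unit = worker.join()
}

object ActorSketch {
  def main(args: Array[String]): Unit = {
    val counter = new CounterActor
    (1 to 100).foreach(i => counter.send(Add(i)))
    counter.send(Report(total => println(total))) // prints 5050
    counter.send(Stop)
    counter.join()
  }
}
```

Because only the actor’s thread ever reads or writes `total`, no locks are needed around it. A real toolkit adds supervision, scheduling across a thread pool, and distribution on top of this basic idea.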

In recent years, programmers have increasingly decided to embrace a purer form of Functional Programming by going with either Typelevel or ZIO. These are competing library ecosystems that expose data structures and abstractions for gaining greater control over side effects. Users will be familiar with their respective IO monads, HTTP routing, and streaming features. The IO monad is inspired by Haskell and is a fundamentally sound way of controlling side effects in code, such as sending requests over the internet and reading from the file system.
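The core trick behind an IO monad can be sketched in a few lines. The `IO` class below is a toy written for this article, not the type from cats-effect or ZIO, and it leaves out everything that makes those libraries practical (stack safety, error handling, concurrency). It only shows that a program becomes a value describing effects, and that nothing happens until that value is explicitly run.

```scala
// A toy IO type for illustration only; the real libraries are far richer.
final class IO[A](val unsafeRun: () => A) {
  def map[B](f: A => B): IO[B] = new IO(() => f(unsafeRun()))
  def flatMap[B](f: A => IO[B]): IO[B] = new IO(() => f(unsafeRun()).unsafeRun())
}

object IO {
  // Wrap a side effect without running it.
  def delay[A](effect: => A): IO[A] = new IO(() => effect)
}

object IODemo {
  def main(args: Array[String]): Unit = {
    var log = Vector.empty[String]

    // Building the program performs no side effects yet.
    val program: IO[Int] = for {
      _ <- IO.delay { log = log :+ "reading" }
      n <- IO.delay(21)
      _ <- IO.delay { log = log :+ "writing" }
    } yield n * 2

    println(log.isEmpty)         // prints true: nothing has run so far
    println(program.unsafeRun()) // prints 42
    println(log)                 // prints Vector(reading, writing)
  }
}
```

Separating the description of effects from their execution is what lets these libraries reason about, compose, and retry effectful code.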

Closing Thoughts

Learning and working in Scala has been an eye-opening and rewarding process for me. It has introduced me to the realm of functional programming and to the other wonderful languages which exist out there, such as Haskell, Lisp, OCaml, Erlang, and Smalltalk.

I truly wish that more of the industry knew about and embraced Scala. But it doesn’t.

Yet it is encouraging to see that Functional Programming languages are becoming mainstream, and that other mainstream languages are borrowing concepts from Functional Programming.

In the future, I will have to make a choice as to whether I want to allow my love of Scala to govern my career although it might narrow my possibilities, or whether I should be more open minded about trying other languages.

I think it is a choice that practitioners of all beloved yet niche technologies have to face. Should I choose to continue with Scala, I would at least have the comfort of knowing that I am in good company.